Twitter quietly kills suspected firearm-themed bot network

One minute, they were cranking out an endless stream of tweets, pushing conservative-leaning news articles or fiery video commentary. The next, they were gone, without a trace or an explanation.

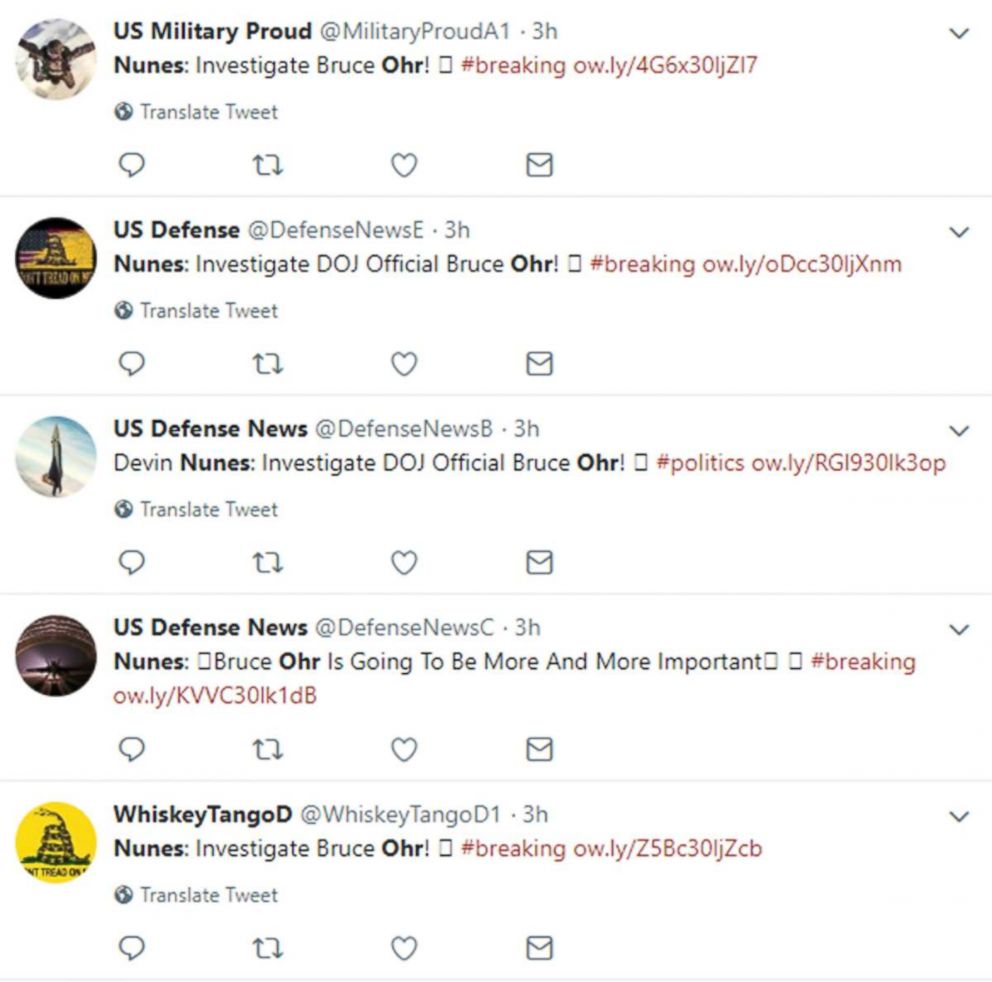

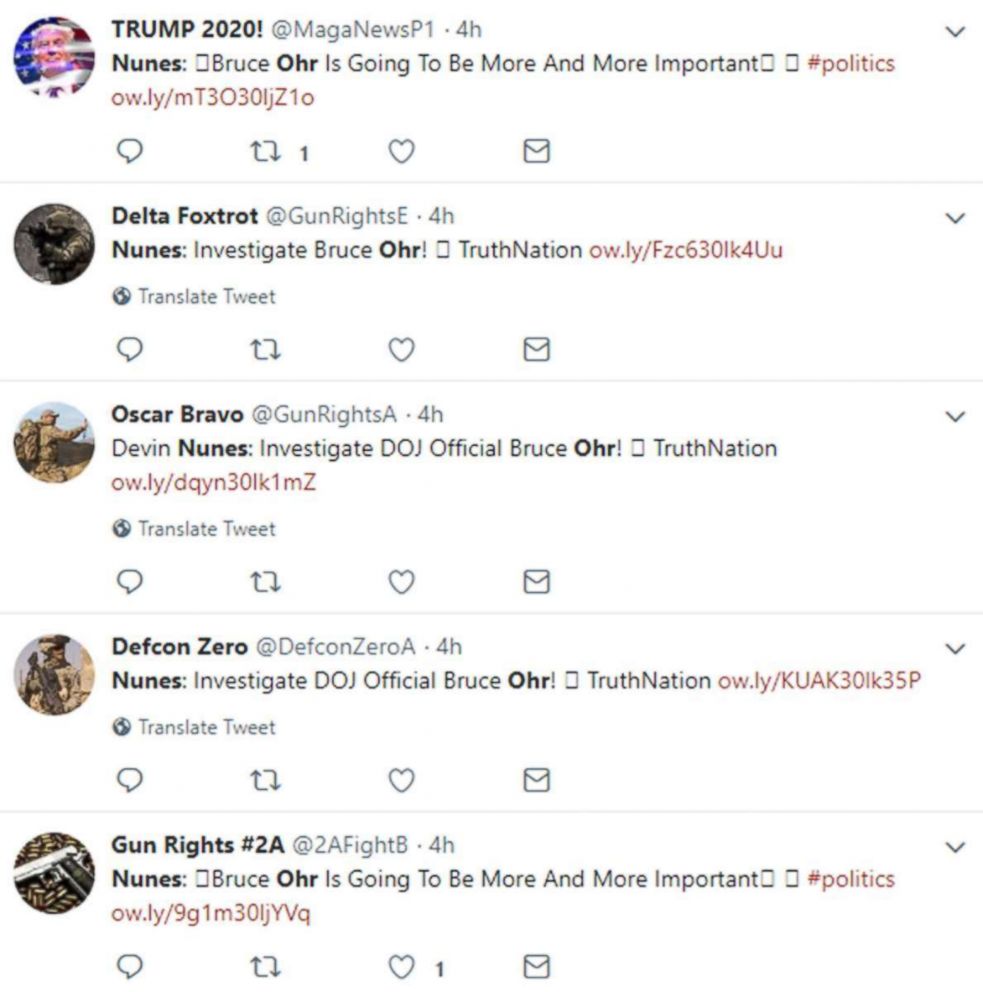

Twitter took down what experts told ABC News was almost certainly a bot network last Friday, one that impersonated military or firearms enthusiasts with account handles like "@TacReviewD," "@MilitaryProudA1," "@PatriotsTacA" and "@WhiskeyTangoD."

The demise of the linked, likely preprogrammed profiles is one of the latest casualties in Twitter's six-month siege against inauthentic, and often politically provocative, accounts, opening a window into what one expert called the ever-evolving "cat and mouse" nature of bot-hunting.

"Twitter has definitely upped its game when it comes to dealing with botnets," said Ben Nimmo, a fellow at the Atlantic Council's Digital Forensic Research Lab. "So the bot-herders [who create and program the accounts] have upped their game too."

Before its removal, the suspected bot network was probably not very large -- numbering just over 100 accounts in Nimmo's estimation -- but it was hardworking. Some of the accounts, which had been live for a little more than a year, tweeted nearly 30,000 times.

The timing of similar tweets across accounts appeared staggered, leading Nimmo to believe that, as rudimentary as the suspected bot network was, someone had at least taken some time to try to duck Twitter's bot-detecting tools. And it worked -- for a while.

Amid concerns that inauthentic accounts were increasingly polluting social media platforms and in the wake of revelations that Russia allegedly attempted to spread divisiveness on social media ahead of the 2016 U.S. election, Twitter announced in February it would be cracking down on bots and other automated feeds.

"Keeping Twitter safe and free from spam is a top priority for us," Yoel Roth, Twitter's head of site integrity, said in an announcement at the time. "One of the most common spam violations we see is the use of multiple accounts and the Twitter developer platform to attempt to artificially amplify or inflate the prominence of certain Tweets."

Twitter dismantled most of the firearms-themed network Friday, but the social media giant declined to provide any additional information about it or how it came to the company's attention. Nicholas Pacilio, a company spokesperson, confirmed to ABC News in an email that some accounts in the network had been suspended, but said that there's not "much more I'm able to say."

Pacilio did not answer follow-up questions, including how many bot networks the company has dismantled. He only pointed to a company blog post from May that said fewer than 1 percent of accounts make up the majority of those reported for abuse.

Bret Schafer, a social media analyst for the Alliance for Securing Democracy, reviewed some of the purported bot accounts for ABC News and said he thought they were "very suspicious." He ran a few handles through an online bot-detecting tool developed by Indiana University’s Network Science Institute, and the tool flagged each as likely non-human.

Nimmo said that from the information available, it's hard to tell who might've been behind the network beyond the suggestion that it was a small operation, potentially run by a single person -- nothing like the scale of the coordinated influence operation Russia allegedly ran online ahead of the election.

But it's clear the fight against bots and botnets has entered a new phase. As Twitter continues its crackdown, coders behind the bots are trying to outsmart Twitter's algorithms by tweaking the volume, timing, content and even style of the robot-written posts.

"Bot herders are trying to second-guess what Twitter is looking for and get ahead of it," Nimmo said.

That's not to say that the firearms-themed network was that well-hidden. The account handles were often re-used, only with the letter A, B, C, D, or E added on the end. The profile photos often showed individuals with firearms, but a quick reverse image search on a few photos showed they had apparently been lifted from firearms websites.

And in perhaps the clearest sign that these Twitter users weren’t who they appeared to be, the accounts posted links to a single website at regular intervals, with a consistency and precision uncommon among human users. The site was a bare-bones conservative website where a few articles and videos lived alongside ads hawking commemorative Donald Trump coins, tactical pens and flashlights. GoDaddy, the domain registration firm listed on the website’s public register, declined to identify the owner, citing its privacy policy.

Last week, for example, the network produced a deluge of tweets with links to a video showing Rep. Devin Nunes (R-Calif.) calling for an investigation into a senior Justice Department official -- a revamped line of attack by critics of the ongoing probe of Russian interference in the 2016 election.

"That's classic bot behavior," said Nimmo, who analyzed archived data related to the suspected network at ABC News’ request. "Seeing a single post do that is entirely innocuous, but when you see an account and you're scrolling down it and every time the post is the exact wording of the headline with the source at the end, it looks like someone has automated that process."

Darren Linvill, a Clemson University associate professor who, with his colleague Patrick Warren, recently made headlines for an analysis of millions of tweets linked to the purported Russian operation, told ABC News he agreed the firearms-themed network was likely a small effort. But he added that it was always possible it was just one piece of a larger operation that shifted its focus from time to time based on real-world events.

Linvill said one lesson he learned from analyzing the Russian cache is that it’s important to identify and analyze current troll and bot networks in as close to real time as possible, because the technical tactics in that cat-and-mouse game are always changing.

“It’s difficult because what these trolls were doing in 2014 is totally different to what they were doing in 2015 and 2016,” he said. “That’s one of the main takeaways from our dataset, how their efforts change over time.”