I posted on Instagram about my anti-Semitic trolls and their persistent abuse. Instagram deleted my post: OPINION

Kate Friedman-Siegel is the voice behind the Instagram sensation, @CrazyJewishMom. Opinions expressed in this column do not necessarily reflect the views of ABC News.

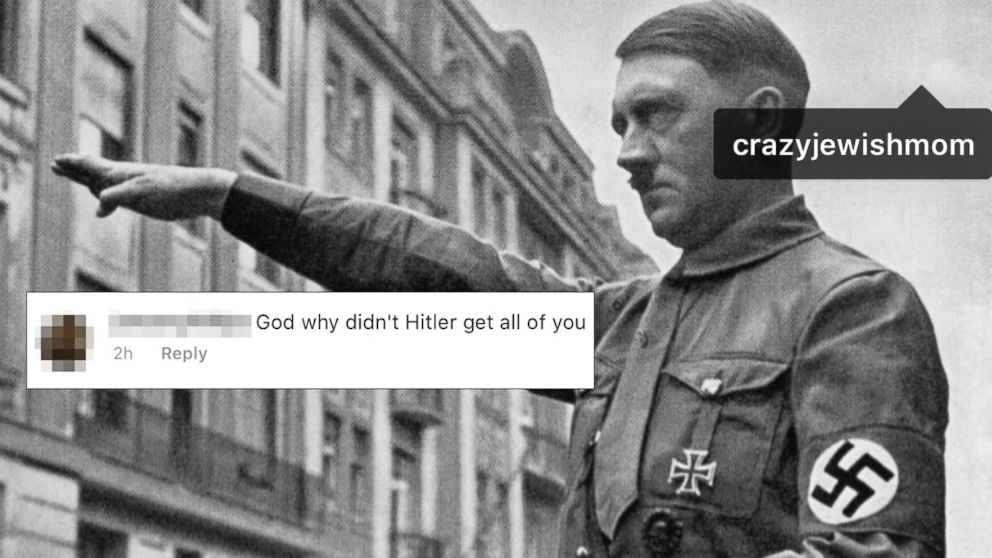

On Saturday, the day of the deadliest attack on the Jewish community in American history, I decided to post these images on my Instagram account: a swastika and a barbecue grill labeled “Jewish Stroller,” a clear nod to the gas chambers of the Holocaust.

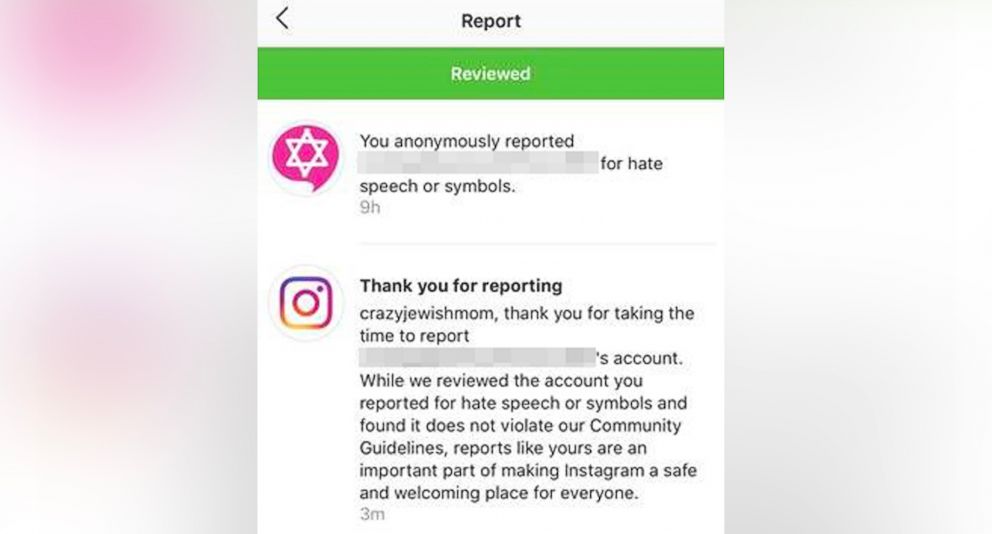

Why did I choose to post these threatening, anti-Semitic images to Instagram? Because the first one was sent to me by the same account that posted the second one, and when I tried to report them to Instagram as hate speech, Instagram ruled that these images didn’t violate their community guidelines. (Guess the reviewer thought the account that sent me these images was just a friendly stranger inviting me to a cookout!)

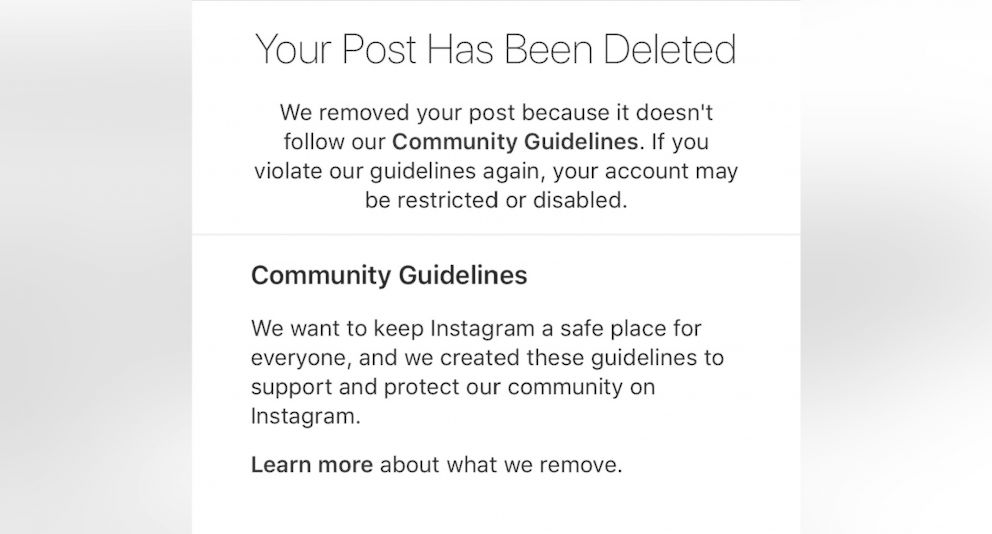

This sequence of events makes it even more difficult to understand what happened next: About 30-40 minutes after I shared it on my page, Instagram deleted the post from my account, notified me that it had violated community guidelines, and threatened to disable my page in this notice that popped up when I refreshed my feed:

I’m not a tone-deaf, sociopathic Aryan race warrior who wants to rub salt in the wound inflicted on the Jewish people in Pittsburgh and around the world. I’m a proudly and publicly Jewish person who runs a humorous Instagram account called @CrazyJewishMom, where I post funny memes and mortifying conversations with my neurotic, Jewish mom. My intent with sharing that post was not to cause anyone pain, but rather to highlight the problem with hate speech regulation on Instagram.

This kind of digital hate is nothing new for me. I receive an abundance of Hitler-y, swastika-filled harassment like this. (Seriously, I have a folder of images on my phone FULL of white supremacist propaganda screenshots, and I maintain a direct line to the Anti-Defamation League, which has asked me to keep track of the hate speech I receive.)

After Instagram removed that first post, which I had hoped would start a conversation about how hate speech is regulated on the platform, I shared this update:

After a great deal of outrage from my followers poured out in the form of comments on this new post, I guess Instagram made the decision to restore the original post they had deleted, because a few hours later I noticed it had re-appeared on my page. I should also note that the account that originally sent me the images seems to no longer exist on Instagram.

Typically, I choose not to talk publicly about the awful hate speech I am targeted with online, because I worry that calling out the anti-Semitism gives the bigots the megaphone they want and elevates their message of hate using my own platform. However, I’ve been trusting that Instagram actually takes down these hate-spewing pages when they’re reported. It seems that something in that process is not working, so I feel compelled to share publicly my experience with Instagram’s uneven and ineffective policing of hate speech.

I have read a lot of explanations from Facebook, Instagram’s parent company, about why it’s so hard to regulate hate, citing the abundance of content on their platform and the inability of any machine to find every instance of hate in such a broad ecosystem. But setting aside that argument for a moment, look at what happens when someone like me does actually take the time to flag and report hate that the machines struggle to detect. They have to nail it then, right? Nope! And, in the experience I just had, Instagram failed to meet the standards it set for itself!

Except nipples. Instagram is always amazing at policing nipples.

While it’s easy to dismiss this kind of hateful rhetoric as silly memes spread by impotent trolls, it does have a real-world impact. In addition to maintaining a hate speech folder on my phone, I’ve had to call the police to speaking events my mom and I hosted because of threatening messages left on posts talking about the upcoming events. My experiences aside, look at how the violence we witnessed last week in America was fomented on social media. The pipe bomber actively spewed hate and conspiracy theories on social media and even threatened Democratic strategist Rochelle Ritchie on Twitter. When Ms. Ritchie reported the threat to Twitter, she was told that they found no violation of Twitter rules. The murderer who killed 11 Jewish people in a Pittsburgh house of worship this past Saturday while shouting “all Jews must die” was active on social media and even posted on Gab, a fringe social media platform, about his intent to commit what was, according to the ADL, the single deadliest attack on the Jewish community in American history.

Mark Zuckerberg has said, “In a lot of ways Facebook is more like a government than a traditional company,” and, in my opinion, it’s not an insane fantasy to consider Facebook and its owned platforms (colonies) like Instagram in this way. Facebook arguably has more power, data, and influence than most nations in the world, and it governs its citizens with “community guidelines,” which are essentially laws. But unlike with laws in the United States, for example, the people of Facebook nation have absolutely no say in writing the laws or in hiring the people who write them, or even a real understanding of the mechanisms by which Facebook enforces those laws.

If a white supremacist adds a Jewish person to a group direct messaging thread full of Nazis and sends the message, “I’m going to kill you, you must die filthy Jew...Hitler was right”, and the Jewish person reports that experience to Instagram...what would happen next? The answers in Instagram’s community guidelines don’t provide clarity on how the process works, on who decides whether something is a violation, or even on how they make these rules. The description of the review process on Instagram is, “We have a global team that reviews these reports and works as quickly as possible to remove content that doesn’t meet our guidelines.” So, when I submit a hate speech report, does that mean a human reviews it? Does a robot? Is the “global team” just a room full of labradoodle puppies barking voice commands at a computer programmed to understand woofing intonations? I honestly don’t know, because there is very little transparency.

In America, we elect representatives to create laws and have a clearly defined process we can follow to seek and receive justice. On Facebook and Instagram, the only justice that can be enforced is kicking a violator off the platform. Because as all-powerful and all-knowing as Facebook nation has become, it can’t put you in Facebook jail. Facebook jail doesn’t exist.

Yet, an incitement to violence in the real world, like the hypothetical one I described, is arguably a felony in America.

I should take a moment here to say, I’m not writing this to try and be hateful or attack social media. I love, respect, and am frankly in awe of Instagram and Facebook; I believe these forms of media are a powerful connective force and have done a great deal of good for the world. The idea that a young person from the LGBTQIA community struggling with their sexuality in a small town with no one to talk to can press a button, and connect with a vast and supportive network of allies is incredible. On a more personal note, my entire career is inextricably tied to and derived from social media. Without Instagram, I may never have had the opportunity to realize my dream of becoming a published author. But while having a viral social media account that opens up a world of opportunity is hardly a universal experience, we are all increasingly involved with and dependent on social media in a number of aspects of our lives. Major corporations like Target invest big in their social media strategy, the average American spends over two hours a day on social media, and even if you’re someone who isn’t sure what a twitter is, the president of the United States makes official presidential statements on the platform, so like it or not, social media is an important force in the world.

Facebook, Instagram...can you please fix this so we can all go back to sharing pizza memes and cat videos? Or at the very least, provide a fulsome explanation of how the process is meant to work, so we can all try to fix it together as citizens of the beautiful and diverse Facebook Nation?

In a statement to ABC News regarding this column, a spokesperson for Instagram said, “We mistakenly removed this content and we apologize to @katefriedmansiegel for the error. The content has now been reinstated, and we have removed the offending account for violating Instagram’s Community Guidelines. We do not allow content that attacks people based on their race, ethnicity, national origin, religious affiliation, or their sexual orientation, caste, sex, gender, gender identity, and serious disease or disability. We will continue to remove content that violates our guidelines as soon as we’re aware.”

In a statement to ABC News regarding this column, Facebook said in part, "Our policies are only as good as the strength and accuracy of our enforcement – and our enforcement isn’t perfect. The challenge is accurately applying our policies to the content that has been flagged to us. In some cases, we make mistakes because our policies are not sufficiently clear to our content reviewers; when that’s the case, we work to fill those gaps. More often than not, however, we make mistakes because our processes involve people, and people are fallible. We know we need to do more. That’s why, over the coming year, we are going to build out the ability for people to appeal our decisions."

Twitter did not immediately return ABC News’ requests for comment.

Addendum from Kate Friedman-Siegel in response to Instagram’s statement: I am happy to learn that Instagram ultimately made the decision to remove the account that sent me a Holocaust meme, but the fact that the initial decision I received ruled that the account didn’t violate community guidelines underscores the point of my op-ed. Was the initial decision an automated response? Was my report reviewed a second time for some reason? If so, what triggered a second look? Or was the account removed based on a different report that another user filed? Because we don’t have transparency, I find it difficult to have confidence in the review process.