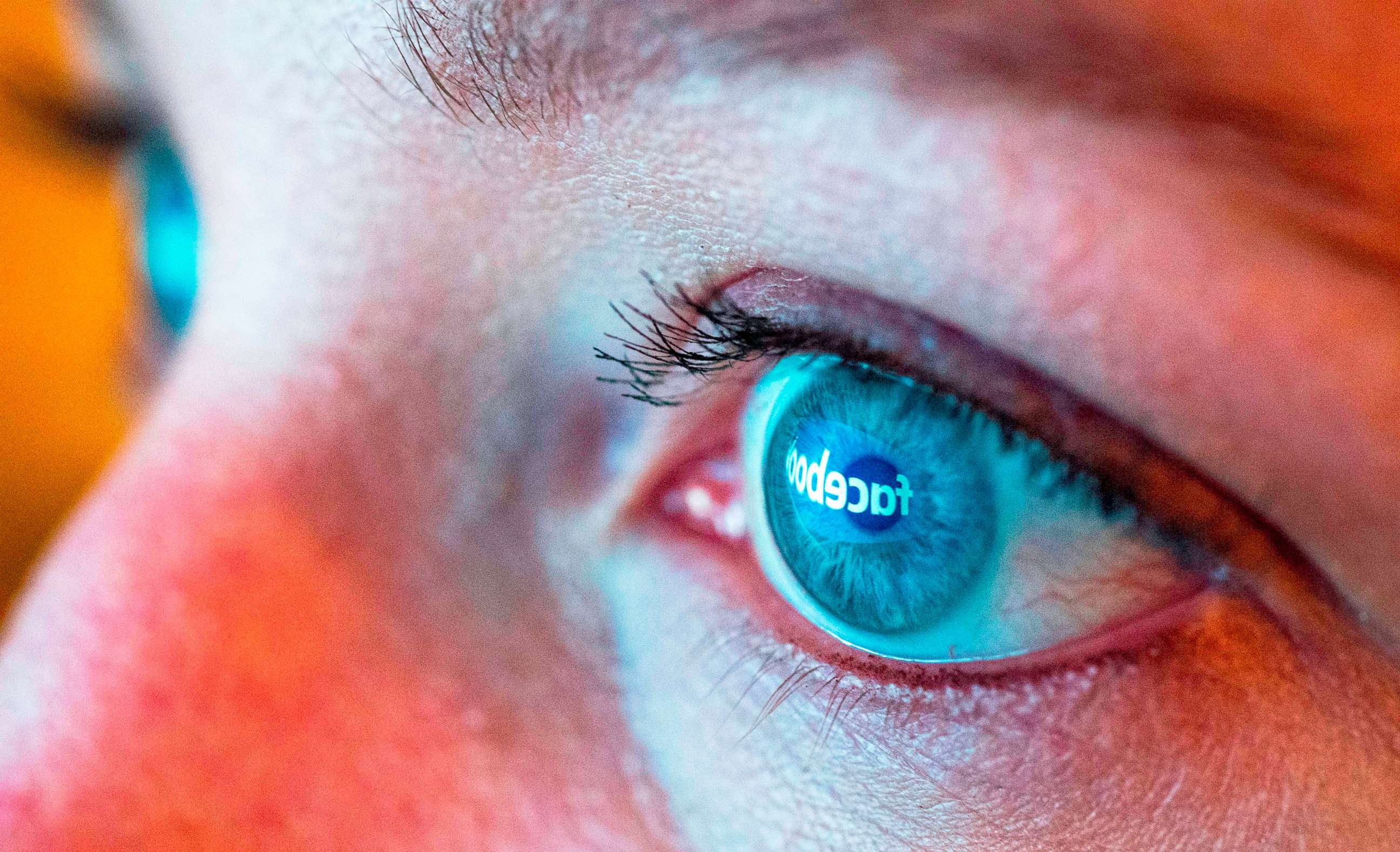

More people engage with verifiably false news outlets on Facebook now than in 2016

More people are now engaging on Facebook with outlets that repeatedly publish verifiably false content than did in the lead-up to the 2016 election, new research shows.

These findings come despite a slew of new efforts from social media companies to combat the spread of misinformation on their platforms ahead of the 2020 presidential vote.

The level of engagement with articles from "false content producers," which masquerade as news organizations but repeatedly publish demonstrably false material, has increased 102% since 2016, according to a report published Monday by the think tank German Marshall Fund Digital in partnership with the firms NewsGuard and NewsWhip.

"What we were really trying to zero in on was something very simple -- how is it that we keep hearing all these reports about disinformation when the platforms have taken so many actions? There have been so many announcements to prevent disinformation," Karen Kornbluh, the director of the digital innovation and democracy initiative at the German Marshall Fund, told ABC News. "We realized there is a whole disinformation supply chain, which starts with these sites that mask as news outlets."

The researchers also found that the level of engagement with sites that don't necessarily masquerade as news outlets but repeatedly fail to present information responsibly has increased 293% in the run-up to the 2020 election compared with the run-up to the 2016 presidential election. Examples of these sites, dubbed "manipulators," include Breitbart News, according to the researchers.

Moreover, interactions with both kinds of deceptive sites -- false content producers and manipulators -- spiked 242% between the third quarter of 2016 and the third quarter of 2020, the researchers found.

Kornbluh noted that while overall engagement across all content increased during the timeframe they examined, "we see the disinformation sites increasing at greater rates than overall engagement."

Kornbluh said the researchers didn't expect to see increased interactions with the false content producers, "but we saw it there, and then we saw an enormous increase in interactions with the manipulators" since 2016.

"That is an explanation for why you’re seeing so much disinformation," she said.

The researchers rated these outlets based on methodology developed by the startup NewsGuard, which ranks the credibility of outlets that claim to be journalism based on nine factors.

Kornbluh said it can be hard initially for regular users to tell the difference between these false content producers, manipulators and credible news organizations, which is in part why the misinformation spreads so fast.

If you see a piece of news or a headline on Facebook, "one thing you need to check is, what is the outlet this is coming from?" she said. "What are they using to make this claim? And really probing what's the evidence behind this."

Ultimately, however, she said "there needs to be actions to change the incentives of the platforms."

"Just like the car companies in the early days, it wasn't in their incentives to put in a seat belt, and we didn't ask the individuals to install seat belts," she said.

Social media companies need to implement policy and internal changes to better protect users from misinformation, she argued.

As for Facebook, "what they need to do is instead of playing whack-a-mole with individual pieces of content, they need to look at the disinformation supply chain," she said, "and how they can disrupt this supply chain and not amplify that."

A Facebook spokesperson told ABC News in a statement that "engagement does not capture what most people actually see on Facebook."

"Using it to draw conclusions about the progress we’ve made in limiting misinformation and promoting authoritative sources of information since 2016 is misleading," the spokesperson added. "Over the past four years we've built the largest fact-checking network of any platform, made investments in highlighting original, informative reporting, and changed our products to ensure fewer people see false information and are made aware of it when they do."