What to know about Instagram's new anti-bullying feature with AI technology

Two major changes are coming to Instagram meant to address bullying.

The social platform is rolling out two new features, including one powered by artificial intelligence that notifies people when their comment may be considered offensive before it's even posted.

"While identifying and removing bullying on Instagram is important, we also need to empower our community to stand up to this kind of behavior," Adam Mosseri, head of Instagram, said in a post. "It’s our responsibility to create a safe environment on Instagram."

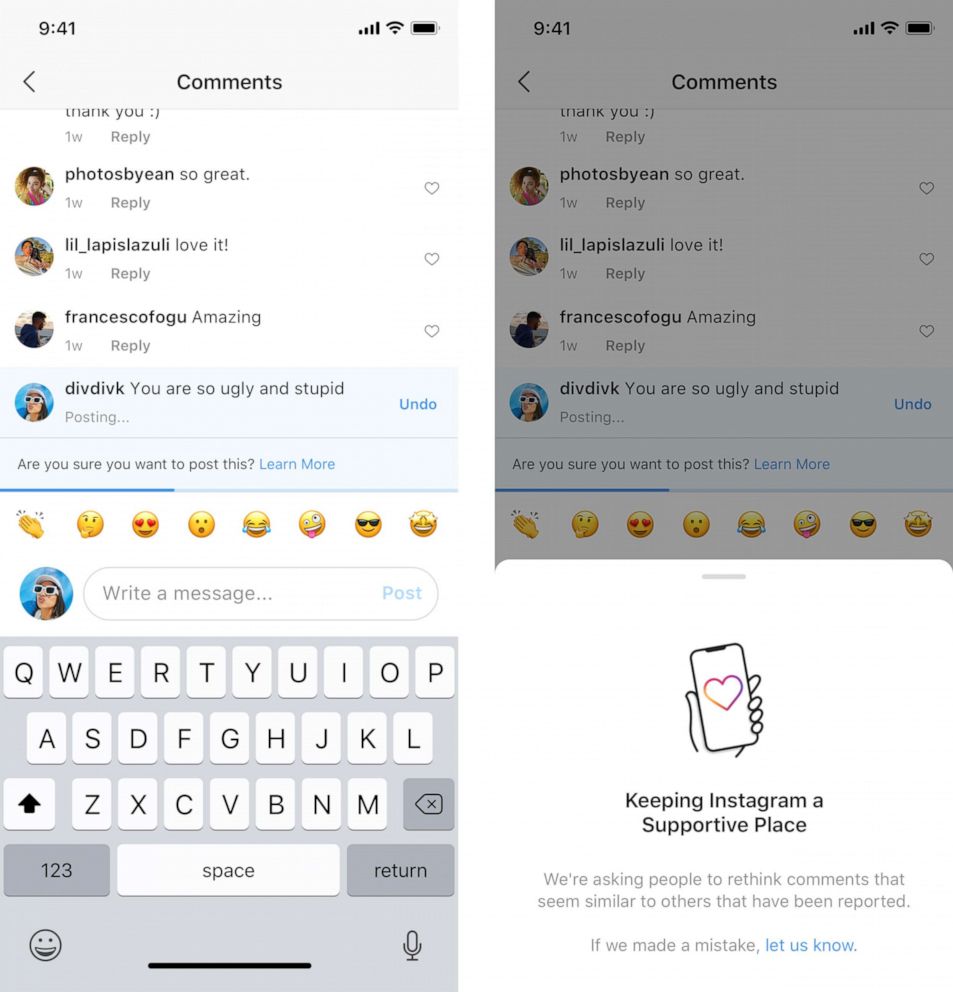

The first new feature prompts users to pause before posting something offensive.

Here’s how it works: if someone is about to post a mean comment, for example “you are so ugly and stupid,” they will get an instant in-app pop-up notification asking, “Are you sure you want to post this?”

The prompt is powered by AI. According to Mosseri, "this intervention gives people a chance to reflect and undo their comment and prevents the recipient from receiving the harmful comment notification."

Instagram says it has run early tests of the feature and found that at least some people delete their negative comments instead of posting them.

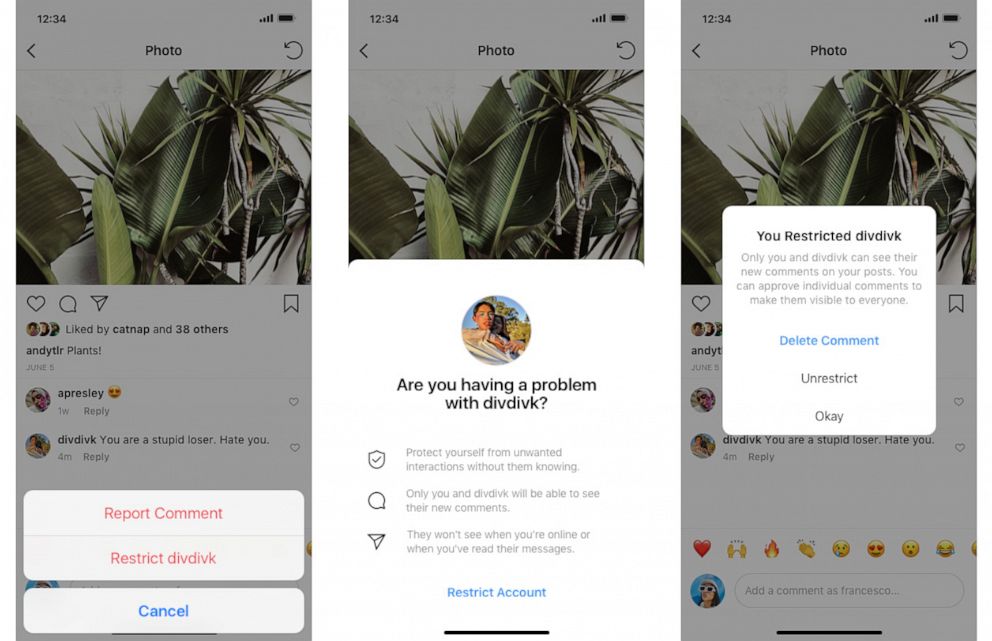

The second new feature is called “Restrict.”

It's meant to help users quietly limit interactions with bullies whom they might be reluctant to block, unfollow, or report because of the real-life consequences.

Once you "restrict" someone, that person's comments on your posts will be visible only to them. They also won’t be able to see when you’re active on Instagram or when you’ve read their direct messages.

Instagram acknowledges that bullying can be a complex issue.

A toxic user experience can drive away not just everyday users but also brands. In the long run, failing to address these sorts of issues across Instagram's ever-growing community could damage not only the platform's reputation but also its finances.